Overview

LM (languages models) are considered to firstly obtain the capabilities of interfecing, compared with other deep learning methods. LMs are trying to select most suitable words based on the previous given informations, which is called ‘completing’, regarding to the technique using ‘prompt’ to align all the downstream tasks into completing sentences.

Therefore, two major types of training data are used:

- Examples of conversations. Where some words/sentences are masked so that supervised learning can be conducted.

- Ranked answers or conversations. This will help LMs to improve the performance. And this is related with RLHF procedure.

Overall, LMs are the most complex systems designed, and there are lots of modified methods based on this structure.

Key Risks

My personal selection of risk for LLM base models will be:

- Hallucination

- Jailbreaking

- Membership Inference Attack

While you may know the ‘OWASP LLM Top10’, which consider the LLM mode, LLM-based tools (agents) and ML/AI platforms as an intergrated system.

Current Researches

Selected Works

Model Assessment

Digital Signatrue

Red-teaming

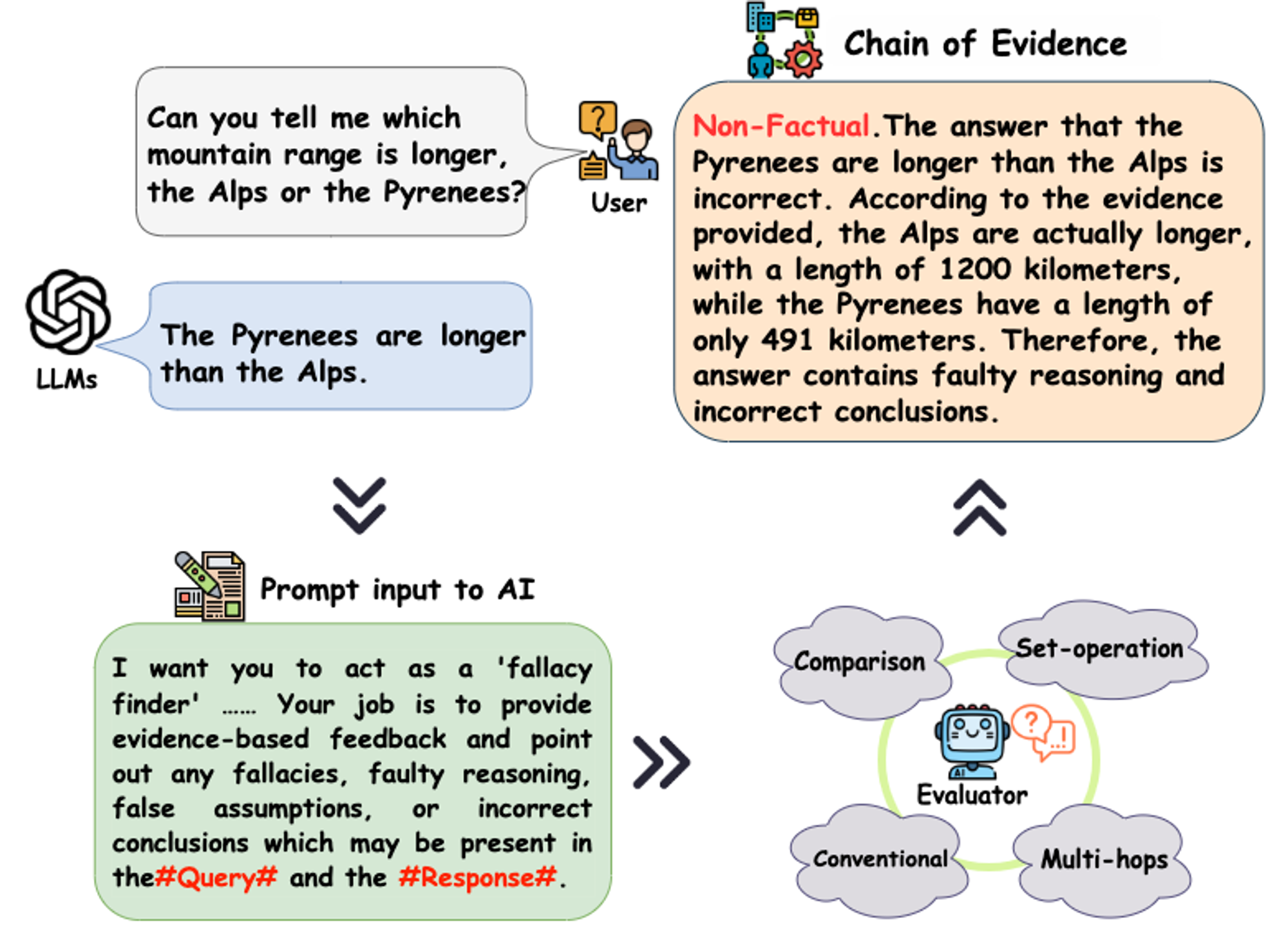

Hallucination v.s. Factuality

RAG

ACL2023:

MPC